Keynote Speakers

Keynote Talks

Title: Training A Large Language Model from Scratch – What does it take?

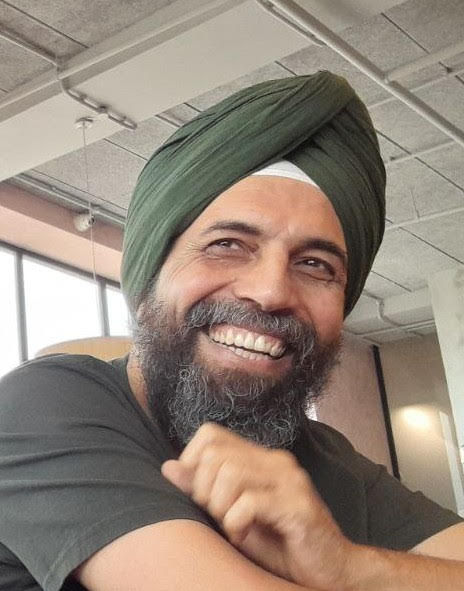

Speaker: Dr. Pratyush Kumar, Assistant Professor, Computer Science and Engineering, Indian Institute of Technology Madras and Co-Founder Sarvam AI

Time: 18th December, 2025

Abstract: In this talk, I will discuss our experiences in setting up the compute and data infrastructure, and learnings as we trained large language models from scratch.

Speaker Bio: Dr. Pratyush Kumar is the Co-founder of Sarvam and a leading voice in India’s AI ecosystem. A two-time founder, he previously built AI4Bharat and OneFourth Labs, both instrumental in advancing open-source AI for Indian languages. Prior to founding Sarvam, Dr. Kumar was a researcher at Microsoft Research and IBM, where he worked on cutting-edge problems in machine learning and natural language processing. He has published over 89 research papers at top-tier conferences and journals, contributing to both academic and applied advances in the field. Dr. Kumar holds degrees from IIT Bombay and ETH Zurich and continues to build AI that reaches every corner of the country.

Title: High Performance Scientific Computing in India: Past, Present and Future

Speaker: Dr. Jasjeet Singh Bagla, Professor, Department of Physics, Indian Institute of Science Education and Research, Mohali

Time: 19th December, 2025

Abstract: I will review the initiation and evolution of high performance scientific computing in academia in India. I will then discuss the problems facing the scientific community, and possible solutions, as we look to scale up to exascale computing and routine use of AI/ML in scientific applications. AI/ML/LLMs are expected to play an important role in scientific computing as we try to overcome the limitations of standard approaches for highly complex problems. In particular, I will discuss the factors that often determine effective usage of facilities by the larger community: easy-to-use setup, maintenance, documentation, support, and training. Another factor that should shape our approach for the future is access to top-of-the-line facilities. I will try to make a case for possible solutions that take these factors into consideration.

Speaker Bio: Jasjeet Singh Bagla is a physicist whose research interests are in astronomy and cosmology. He completed his higher education at Delhi University and his PhD at the Inter-University Centre for Astronomy and Astrophysics (IUCAA) in Pune. After post-doctoral stints at the University of Cambridge, UK and at the Harvard-Smithsonian Center for Astrophysics, he joined the Harish-Chandra Research Institute, Allahabad as a faculty member. His love for teaching and mentoring young students brought him to IISER Mohali in 2010, where he has worked ever since. He has mentored a number of students and interns.

Title: A Case for Scale-out AI

Speaker: Dr. Christos Kozyrakis, Professor, Electrical Engineering and Computer Science, Stanford University & NVIDIA Research

Time: 20th December, 2025

Abstract: AI workloads, both training and inference, are now driving datacenter infrastructure development. With the rise of large foundational models, the prevalent approach to AI systems has mirrored that of supercomputing. We are designing hardware and software systems for large, synchronous HPC jobs with significant emphasis on kernel optimization for linear algebra operations. This talk will argue that AI infrastructure should instead adopt a scale-out systems approach. We will review motivating examples across the hardware/software interface and discuss opportunities for further improvements in scale-out AI systems for both training and agentic reasoning.

Speaker Bio: Christos Kozyrakis is a computer architecture researcher at NVIDIA and the Leonard Bosack and Sandy K. Lerner Professor of Electrical Engineering and Computer Science at Stanford University. His research focuses on cloud computing technology, hardware and software systems design for artificial intelligence, and artificial intelligence for systems design. Christos holds a PhD from UC Berkeley and a BS from the University of Crete (Greece), both in Computer Science. He is a fellow of the ACM and the IEEE. He has received the ACM SIGARCH Maurice Wilkes Award, the ISCA Influential Paper Award, the ASPLOS Influential Paper Award, the SoCC Test of Time Award, the NSF CAREER Award, the Okawa Foundation Research Grant, and faculty awards from IBM, Microsoft, and Google.

HiPC 2026 is the 33rd edition of the IEEE International Conference on High Performance Computing, Data, and Analytics. It will be an in-person event in Bengaluru, India, from December 16 to December 19, 2026.

IEEE Conduct and Safety Statement

IEEE believes that science, technology, and engineering are fundamental human activities, for which openness, international collaboration, and the free flow of talent and ideas are essential. Its meetings, conferences, and other events seek to enable engaging, thought-provoking conversations that support IEEE’s core mission of advancing technology for humanity. Accordingly, IEEE is committed to providing a safe, productive, and welcoming environment to all participants, including staff and vendors, at IEEE-related events.

IEEE has no tolerance for discrimination, harassment, or bullying in any form at IEEE-related events. All participants have the right to pursue shared interests without harassment or discrimination in an environment that supports diversity and inclusion.

Participants are expected to adhere to these principles and respect the rights of others. IEEE seeks to provide a secure environment at its events. Participants should report any behavior inconsistent with the principles outlined here to on-site staff, security or venue personnel, or to [email protected].

IEEE Computer Society Open Conference Statement

Expanding participation in computing is central to the goals of the IEEE Computer Society and all of its conferences. The IEEE Computer Society is firmly committed to broad participation in all sponsored activities, including but not limited to, technical communities, steering committees, conference organizations, standards committees, and ad hoc committees that welcome the entire global community.

IEEE’s mission to foster technological innovation and excellence to benefit humanity requires the talents and perspectives of people with many disciplinary backgrounds.

All individuals are entitled to participate in any IEEE Computer Society activity free of discrimination and harassment.